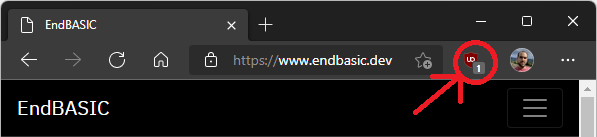

One thing that had been bothering me about my websites—including this blog, but especially when launching https://www.endbasic.dev/ just a few months ago—was this:

A tracking warning. uBlock complained that my properties had one tracker. And it’s true they had just one: it was Google Analytics, or GA for short.

GA is the de facto standard for web analytics: it is extremely powerful and ubiquitous. However, GA has grown exceedingly complicated, installs cookies (thus requiring the utterly annoying cookie warning in the EU), has issues with the GDPR, and, depending on how you look at it, is also very privacy-invasive.

I wanted the warning from uBlock to go away if at all possible, which it may not be, because I like web analytics. I want to know how people reach the websites that I run, what content they find interesting, and where they come from. I don’t particularly care about much more than that. I especially do not care about who you are specifically or what you do outside of this property. So I wanted an alternative to GA: an alternative that I fully understood so that, if it had to trigger a privacy warning, I could back it up.

So. Did I research the web for more privacy-friendly alternative services? Pffft, no, of course not. Did I roll up my sleeves and create my own? You bet! Otherwise you wouldn’t be reading this now 🙃

TL;DR: Check out the dashboard for this site.


Brief history

The launch of the https://www.endbasic.dev/ site back in July of 2021 coincided with the launch of the EndBASIC cloud service, which was the first REST service I built and deployed from scratch on a public cloud provider. At around that same time, I was reading a couple of articles about how much traffic GA never gets to see because of privacy blockers like uBlock. I was curious to know how that skewed my metrics. Had I been running my own web server, it would have been a simple matter of processing the raw logs… but I don’t, so that was not an option.

Meanwhile, the cloud service I wrote for EndBASIC contained all the foundational code I needed to quickly build another one. And, given my very modest requirements for web analytics, I thought: “How hard would it be to repurpose that code to ingest data about page requests?” Plus, “If I had that running, I could also extend this blog with post-level voting features!”—something I lost when moving away from Medium and then dropping Disqus. I got to work and, within a couple of weeks, I had the initial version of my own analytics backend up and running. The codename? EndTRACKER, a homage to its roots.

With the backend service ready, I wired this blog to feed request data into it, and also added the post-voting features that you can see at the bottom. Things seemed to work fine but, first, I wasn’t ready to drop GA just yet because I had insufficient data to prove that my new system was good enough; and, second, my system still required cookies, so it seemed as if I could not remove the cookie warning anyway. At that point, I got bored? tired? overwhelmed? frustrated? and I stopped there. I left both GA and my own tracker up and running, and I never onboarded EndBASIC’s site.

Until now. About three weeks ago, I decided to finally go the last mile and build a UI for the service so I could look at the data that had already been collected for months. Running ad-hoc SQL queries before this was… interesting, but not fun. Building the first graph was very exciting because it seemed to match what GA had always shown, so I felt confident enough to finally ditch GA and the cookies warning for EU visitors.

Let’s look at how this works.

Overview

EndTRACKER is a small web service written in async Rust, deployed to Azure Functions, backed by a PostgreSQL database hosted on Azure Databases, and using Azure Maps for IP geolocation. The server code is about 4,000 SLoC (without counting the various dependencies, such as warp, sqlx, and reqwest) and the tests are around 3,500 SLoC. The deployed binary is about 10 MB in size, which is not tiny but I think is pretty damn small by today’s standards.

Externally, EndTRACKER offers a REST API. This API has the following endpoints, all keyed on a site ID:

Page view tracking, offered as a GET API for browsers without JavaScript (akin to the same information you would get from server logs) and a slightly richer PUT API for browsers with JavaScript.

Vote interactions, which include APIs to vote on a page, reset the vote on a page, and query the vote counts of a page.

Analytics queries, offered as a flexible GET API to retrieve various statistics during different time frames (e.g. long-term historical data vs. real-time data).
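To make the shape of this API a bit more concrete, here is a minimal sketch of how the three endpoint families could be modeled and routed. The paths (`/sites/<id>/views`, `/votes`, `/stats`), the HTTP methods chosen for the vote endpoints, and all type names are assumptions made up for illustration; the real EndTRACKER routes are not documented in this post.

```rust
/// HTTP methods used by the hypothetical routes below.
#[derive(Debug, PartialEq)]
pub enum Method {
    Get,
    Put,
    Post,
    Delete,
}

/// One variant per endpoint; every variant is keyed on a site ID.
#[derive(Debug, PartialEq)]
pub enum Endpoint {
    /// Page view tracking: `rich` is true for the JavaScript (PUT) flavor.
    TrackPageView { site_id: String, rich: bool },
    CastVote { site_id: String },
    ResetVote { site_id: String },
    QueryVotes { site_id: String },
    QueryStats { site_id: String },
}

/// Maps a method and a path such as `/sites/<id>/views` to an endpoint.
/// Returns `None` for anything that does not match the made-up scheme.
pub fn route(method: Method, path: &str) -> Option<Endpoint> {
    let parts: Vec<&str> = path.trim_matches('/').split('/').collect();
    let (site_id, resource) = match parts.as_slice() {
        ["sites", id, resource] if !id.is_empty() => ((*id).to_string(), *resource),
        _ => return None,
    };
    match (method, resource) {
        (Method::Get, "views") => Some(Endpoint::TrackPageView { site_id, rich: false }),
        (Method::Put, "views") => Some(Endpoint::TrackPageView { site_id, rich: true }),
        (Method::Post, "votes") => Some(Endpoint::CastVote { site_id }),
        (Method::Delete, "votes") => Some(Endpoint::ResetVote { site_id }),
        (Method::Get, "votes") => Some(Endpoint::QueryVotes { site_id }),
        (Method::Get, "stats") => Some(Endpoint::QueryStats { site_id }),
        _ => None,
    }
}
```

With this scheme, `route(Method::Put, "/sites/blog/views")` would yield the richer page view variant for a site called `blog`, while an unknown path yields `None`.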

Internally, EndTRACKER follows a traditional three-layered design:

The frontend layer implements the REST layer, which is purely about deserializing HTTP requests and serializing their responses. The frontend is stateless.

The driver layer implements the business logic for every REST API. For most APIs, the driver doesn’t do much more than pass the request to the database, but for others it has to do more work, such as coordinating various database queries or talking to Azure Maps. The driver is stateful because it is where the various in-memory caches live.

The database layer implements a thin transactional interface with two alternative backends: PostgreSQL and SQLite. I’ll get to why in the testing section below.
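The layering described above can be sketched roughly as follows. This is not EndTRACKER's code: the trait, the in-memory backend, the trivial cache, and the text-based "requests" are all hypothetical stand-ins, and the real service is async and speaks HTTP.

```rust
use std::collections::HashMap;

/// Database layer: a thin interface that several backends can implement.
pub trait Database {
    fn record_view(&mut self, site_id: &str, page: &str);
    fn count_views(&self, site_id: &str, page: &str) -> u64;
}

/// In-memory stand-in for a real backend such as PostgreSQL or SQLite.
#[derive(Default)]
pub struct MemoryDb {
    views: HashMap<(String, String), u64>,
}

impl Database for MemoryDb {
    fn record_view(&mut self, site_id: &str, page: &str) {
        *self.views.entry((site_id.to_string(), page.to_string())).or_insert(0) += 1;
    }

    fn count_views(&self, site_id: &str, page: &str) -> u64 {
        self.views.get(&(site_id.to_string(), page.to_string())).copied().unwrap_or(0)
    }
}

/// Driver layer: business logic plus in-memory state (here, a trivial cache).
pub struct Driver<D: Database> {
    db: D,
    cache: HashMap<(String, String), u64>,
}

impl<D: Database> Driver<D> {
    pub fn new(db: D) -> Self {
        Self { db, cache: HashMap::new() }
    }

    /// Records a page view and drops any cached count for that page.
    pub fn track(&mut self, site_id: &str, page: &str) {
        self.db.record_view(site_id, page);
        self.cache.remove(&(site_id.to_string(), page.to_string()));
    }

    /// Returns the view count, hitting the database only on a cache miss.
    pub fn views(&mut self, site_id: &str, page: &str) -> u64 {
        let key = (site_id.to_string(), page.to_string());
        if let Some(count) = self.cache.get(&key) {
            return *count;
        }
        let count = self.db.count_views(site_id, page);
        self.cache.insert(key, count);
        count
    }
}

/// Frontend layer: stateless translation between "requests" and driver calls.
pub fn handle<D: Database>(driver: &mut Driver<D>, request: &str) -> String {
    match request.split_whitespace().collect::<Vec<_>>().as_slice() {
        ["PUT", site, page] => {
            driver.track(site, page);
            "204".to_string()
        }
        ["GET", site, page] => format!("200 {}", driver.views(site, page)),
        _ => "400".to_string(),
    }
}
```

Keeping the driver generic over `Database` is what allows the same business logic to run against more than one backend.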

The UI is written in very rudimentary JavaScript, uses Chart.js for graph rendering, and is bundled and integrated into this site by Hugo.

Analytics

As mentioned earlier, my needs for analytics are very limited. I think I’d be fine generating statistics based on raw web server logs (like GoAccess does), but I do not have access to those and I don’t fancy running my own web server. (Spoiler alert: it’d have been much easier to do that.)

Here is what is collected:

The page you are visiting, the IP you are coming from, your User Agent, and the referrer (where you are coming from). These are details that are available in the logs of every web server you contact and I wanted to collect no more than this, if possible.

The country you are visiting from as derived from the request’s IP. I had to build this feature to detect when I had to show cookie warnings, but otherwise it is uninteresting information.

A client ID, derived from your browser fingerprint and stored in a cookie if you are not in the EU. In my opinion, this is the most controversial part of the whole thing and I am wondering if it could be scrapped in favor of some pseudo-unique identifier computed on the server. The only caveat is that this ID is used to prevent double-voting in a crude way… so… it would require a minimum level of accuracy.

Update (2022-02-15): There is no more client-side fingerprinting or cookie usage at all. The only thing the server does now is generate a pseudo-unique ID for the client (a hash of the IP, user agent, and site ID to make the ID untraceable across sites), which is used to compute visitors vs. page views and to protect against abuse in votes and comments. There is a definite loss in accuracy, but these features only require reasonable precision during a window of a few days, so this approach is good enough for me yet infinitely more privacy-respecting.
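As an illustration of the server-side ID, here is a minimal sketch that hashes the IP, user agent, and site ID together. The function name is made up, and the hash is an assumption: the post does not say which algorithm EndTRACKER uses. `DefaultHasher` is used only because it ships with the standard library; it is not cryptographic and its output is not guaranteed to stay the same across Rust releases, so a real deployment would likely prefer a cryptographic hash.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Computes a pseudo-unique client ID from request metadata.
///
/// Mixing in `site_id` means the same visitor gets unrelated IDs on
/// different sites, so the ID cannot be used to follow them across sites.
pub fn client_id(ip: &str, user_agent: &str, site_id: &str) -> u64 {
    // Assumption: a non-cryptographic std hasher stands in for the real hash.
    let mut hasher = DefaultHasher::new();
    ip.hash(&mut hasher);
    user_agent.hash(&mut hasher);
    site_id.hash(&mut hasher);
    hasher.finish()
}
```

Two requests with the same IP and user agent map to the same ID within one site, which is enough to tell visitors from page views and to catch crude double-voting, while visitors behind a shared IP with identical browsers become indistinguishable.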

Based on the above details, which are stored in a very simple, always-append table in the SQL database, the server provides all of the logic to compute the following analytics:

Page views, visitors, and returning visitors as a daily count. I like looking at these lines when publishing new posts.

Top N referrers. Great to know where conversations about my posts are happening and join them while they are live.

Top N pages by views. This is useful to know what people find interesting and “optimize” those posts.

Top N pages by total number of vote changes (“engagement”). Also useful to know what people find interesting or what’s controversial.

Top N countries of origin. Not useful to me but had to be built anyway as detailed earlier.
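To give a feel for how little logic these metrics need, here is a sketch that computes daily page views and visitors plus the top N referrers from a list of rows. The `View` struct is a hypothetical shape for the append-only table, and the computation runs in memory here, whereas the real service presumably does the equivalent with SQL queries.

```rust
use std::collections::{BTreeMap, HashMap, HashSet};

/// One row of the hypothetical append-only table.
#[derive(Clone, Debug)]
pub struct View {
    /// Days since an arbitrary epoch.
    pub day: u32,
    pub client_id: u64,
    pub page: String,
    pub referrer: Option<String>,
}

/// Returns `(page views, unique visitors)` per day, ordered by day.
pub fn daily_counts(views: &[View]) -> BTreeMap<u32, (usize, usize)> {
    let mut per_day: BTreeMap<u32, (usize, HashSet<u64>)> = BTreeMap::new();
    for v in views {
        let entry = per_day.entry(v.day).or_default();
        entry.0 += 1;
        entry.1.insert(v.client_id);
    }
    per_day.into_iter().map(|(day, (n, ids))| (day, (n, ids.len()))).collect()
}

/// Returns the `n` most frequent referrers, most frequent first.
pub fn top_referrers(views: &[View], n: usize) -> Vec<(String, usize)> {
    let mut counts: HashMap<&str, usize> = HashMap::new();
    for r in views.iter().filter_map(|v| v.referrer.as_deref()) {
        *counts.entry(r).or_insert(0) += 1;
    }
    let mut sorted: Vec<(String, usize)> =
        counts.into_iter().map(|(r, c)| (r.to_string(), c)).collect();
    // Sort by descending count, breaking ties alphabetically for stable output.
    sorted.sort_by(|a, b| b.1.cmp(&a.1).then_with(|| a.0.cmp(&b.0)));
    sorted.truncate(n);
    sorted
}
```

The other "top N" metrics follow the same count, sort, and truncate pattern with a different grouping key.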

All of these metrics are computed for a given time window with daily granularity. A late addition to the system was the “real-time” view, which offers these same metrics with a 1-minute granularity over the last 30 minutes or so and that the client polls to update the view. This is useful immediately after sharing a post.
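The real-time view boils down to bucketing recent events by minute. A minimal sketch, assuming timestamps are stored as seconds since the Unix epoch (the function name and the representation are illustrative, not taken from EndTRACKER):

```rust
/// Counts page views per minute over the `window_mins` minutes ending at `now`.
///
/// Timestamps are seconds since the Unix epoch. Index 0 of the result is the
/// oldest minute in the window and the last index is the current minute.
pub fn minute_buckets(timestamps: &[u64], now: u64, window_mins: usize) -> Vec<u32> {
    let mut buckets = vec![0u32; window_mins];
    let current_minute = now / 60;
    for &ts in timestamps {
        let minute = ts / 60;
        if minute > current_minute {
            continue; // Ignore events that claim to come from the future.
        }
        let age = (current_minute - minute) as usize;
        if age < window_mins {
            buckets[window_mins - 1 - age] += 1;
        }
    }
    buckets
}
```

A client polling for `minute_buckets(&timestamps, now, 30)` every so often gets a fresh 30-point series to redraw the graph with.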

These analytics are currently computed on demand, without any preprocessing (but with some in-memory caching).

Scalability

Which brings us to scalability. Computing analytics on demand does not scale if you want a snappy client.

For example, computing returning visitors is inherently expensive because it requires a full view of the past, and querying that for a site with a large history would not be cheap. In my case, this is not a problem because the history is still short and, well, I do not handle that much traffic. But for peace of mind, I restricted this computation to only account for a few days in the past, not the full history. A bit of an accuracy loss indeed, but at least it puts an upper bound on the cost of the queries over time.
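The bounded lookback can be sketched like this. The `(day, client_id)` pairs and the `lookback` parameter are assumptions for illustration, and the actual computation presumably lives in a SQL query rather than in memory:

```rust
use std::collections::HashSet;

/// Counts visitors seen on `day` who were also seen during the `lookback`
/// days before it. `views` holds `(day, client_id)` pairs, with days
/// numbered from an arbitrary epoch.
pub fn returning_visitors(views: &[(u32, u64)], day: u32, lookback: u32) -> usize {
    let oldest = day.saturating_sub(lookback);
    let today: HashSet<u64> =
        views.iter().filter(|(d, _)| *d == day).map(|(_, id)| *id).collect();
    // Only rows within the lookback window are examined, which caps the cost
    // of the computation no matter how long the site's history grows.
    let recent: HashSet<u64> = views
        .iter()
        .filter(|(d, _)| *d >= oldest && *d < day)
        .map(|(_, id)| *id)
        .collect();
    today.intersection(&recent).count()
}
```

A visitor whose previous visit predates the window is counted as new, which is the accuracy loss mentioned above.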

Like this example, there are various other design issues in the system that wouldn’t scale with many users and a lot of traffic. I think the data ingestion path would scale fairly well as it is now, but not the analytics. Which is a fine trade-off: the number of viewers of the data (1) is infinitely smaller than the number of site visitors (~100s).

Plus remember that I’m designing for my use case, which is to keep the whole thing as simple as possible. No pre-computation means no background jobs and no data consistency worries. Yes, it is super fun to think about all the details that would need to change (and how) to support an influx of traffic—but until that happens (if ever), these problems don’t need to be addressed.

Multi-tenancy

We can’t talk about a cloud service without using fancy words such as “multi-tenancy”. The EndTRACKER database schema and the frontend have been designed from day one to support tracking multiple sites because I required this feature.

Each site has a unique identifier and contains a list of base URLs that are “owned” by that site. Supporting more than one base URL for a site is important for cases like the EndBASIC project, which has a www. subdomain for the front page and a repl. subdomain for the web-based interpreter. The initial version of the system only supported one base URL, but I had to extend this just last week to finally allow onboarding EndBASIC.
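A minimal sketch of the site-to-base-URL relationship follows. The struct, the function, and the site ID used in the example are hypothetical, and matching by plain string prefix is a simplification of whatever the real service does.

```rust
/// A tracked site and the base URLs that it "owns".
pub struct Site {
    pub id: String,
    pub base_urls: Vec<String>,
}

/// Returns the ID of the first site that owns `url`, if any.
///
/// Base URLs are expected to end with `/` so that a prefix match cannot be
/// fooled by look-alike hosts that merely start with the same characters.
pub fn owning_site<'a>(sites: &'a [Site], url: &str) -> Option<&'a str> {
    sites
        .iter()
        .find(|site| site.base_urls.iter().any(|base| url.starts_with(base.as_str())))
        .map(|site| site.id.as_str())
}
```

For EndBASIC, a single `Site` would list both `https://www.endbasic.dev/` and `https://repl.endbasic.dev/` as base URLs, so page views from either subdomain land under the same site.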

The design leaves room for adding ACLs on each site (say, to control who can access analytics), but I haven’t needed that yet so it’s not implemented. In fact, that’s part of why the analytics for this site are publicly available: because I haven’t built the ACLs! But even if I had built them, I’d have shared access anyway to give you an idea of how things look.

Testing

By far, the most annoying thing to do when developing this service is dealing with its unit tests: they are overly verbose and deserve to be simplified. As mentioned above, the test collateral for EndTRACKER is 3,500 SLoC, which is almost as big as the 4,000 SLoC of production code. These account for a total of 130 tests. Every REST API, driver function, and database query is accompanied by tests.

As annoying as writing these might be, there is no way I would go without writing tests even for a side toy project. For one, the tests have already saved me from obvious bugs in the SQL queries. For another, extensive test coverage is critical for quick iteration. I’ve been able to prototype and refactor this service in place over and over again to add new features because I have enough test coverage. Otherwise, many of the latest features I had to add to support generating analytics would have taken much longer—and I’d probably have broken something along the way.

As for the runtime cost of these, a cargo test invocation takes 250ms on my old Mac Pro 2013. That’s right: running all the tests is incredibly fast. But, how is that possible when a database is involved? Well, of the 130 tests above, there are 23 that validate the database against SQLite and 23 that do the same for PostgreSQL, and all tests for the driver and the REST layer go through the SQLite backend. And you guessed right: those 23 tests against PostgreSQL do not run all the time.

Now, of course, running the tests against SQLite doesn’t provide sufficient confidence that the integrated product works. The PostgreSQL tests must run. For that reason, there is a GitHub Action that runs all tests, including these slower ones, every day and for every PR merge.
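One common way to keep slow tests out of the default run is to make them opt-in. The sketch below gates them on an environment variable; the variable name `RUN_POSTGRES_TESTS` and both helpers are hypothetical, and the post does not say which mechanism EndTRACKER actually uses (Rust's built-in `#[ignore]` attribute is another option).

```rust
/// Decides whether the slow tests should run, given the value of a
/// hypothetical opt-in environment variable.
pub fn slow_tests_enabled(value: Option<&str>) -> bool {
    matches!(value, Some("1") | Some("true"))
}

/// Runs `test` only when the opt-in variable is set; returns whether it ran.
pub fn run_if_enabled(test: impl FnOnce()) -> bool {
    // Assumption: the variable name is made up for this example.
    let value = std::env::var("RUN_POSTGRES_TESTS").ok();
    if slow_tests_enabled(value.as_deref()) {
        test();
        true
    } else {
        false
    }
}
```

A CI job would set the variable before invoking the test suite, while a plain local run would skip the gated tests.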

But how costly are the real tests anyway? For comparison, a cargo test invocation with all tests enabled takes ~3 seconds when talking to my home server and ~30 seconds when talking to a test database on Azure from my home connection. Against the 250ms baseline, that’s roughly a 12x and 120x slowdown, respectively, so not something to take lightly.

The best thing about this setup is that it is simple. There is no Docker involved, no complex local database configuration, nothing. cargo test or git push to a temporary branch and I am good.

But why?

Finally, we get to the question: why have I wasted time on this, just like I have done in EndBASIC?

Because why not? No, really, why not? That’s the primary reason for this project. I thought it would be cool to write and deploy this type of service and pave the way to enriching my blog, so I did.

But there are other (better) reasons. To give you one: in the past, I have developed countless command line tools and I think I’ve gotten pretty good at it: I know what patterns work and do not, both from a usability perspective and from a code organization point of view. But I don’t have as much practice in other areas, and practice makes perfect.

While I have made this service work, I’m aware that there are many rough edges in the internal design and even in the public APIs that need to be addressed, so it has been a great learning exercise. For example, passing a message body in a GET request is something that came back to bite me, and this was just coincidentally covered in a separate article yesterday.

Are you in?

If you want privacy-friendly, production-ready alternatives to GA, there are plenty of options to choose from. Plausible Analytics is the one that stood out to me, and so did GoAccess. I have no plans right now to launch yet another public service in this space.

Or do I? I’ve been pondering the idea of turning this service into “a great companion for a static site” to bring features that are commonly associated with non-static CMSs. These would include the existing voting abilities but also others such as dynamic lists of posts, email subscription handling, support for an email-based contact form, or even a simple commenting system. These are all features that I want for this blog, and maybe they can be bundled in a single package from which you can cherry-pick.

So I am curious: Would you use this system as it is today? If so, why would you find it interesting over any of the other options? What could be its appeal? Let me know!

And, if you haven’t done so yet, take a look at the public dashboard and/or read the privacy policy. Thanks for stopping by!