Code reviews have a bad rep: they are antagonistic in nature and, sometimes, pure red tape. Some argue that they are bad practice; others say that peer programming is better. And while these may be true, I want to tell you a story about a case where code reviews worked well!

Meet X: a junior engineer in the Bazel team circa 2018, tasked to implement two features: A and B. As you may know, Google is big into code reviews—and their tooling for this is awesome; believe me—so this was the standard process for X to get his code checked in.

For Feature A, X was working with two engineers in his same office: Y and Z. Engineer Y was a junior engineer as well but with more coding experience than X. Y wrote code really fast and didn’t like process. Y rubber-stamped long code reviews with little care about the details.

Engineer Z was a high-level tech lead in the team. Z had a lot of experience and knew the product top-to-bottom, but didn’t have much time for code reviews. Worse yet, Z didn’t have enough spare time to closely mentor X or Y.

For Feature B, X was working with me, who was 6000 km away and with a 6-hour time difference between us. I was known for tough code reviews. I also pushed for breaking large changes into smaller commits, because it is impossible to do a thorough review otherwise.

And I also pushed for writing unit and integration tests in each change, guiding X into a good testing approach for feature B at each small step. You might say that this is important but isn’t part of a code review, but this is where the issues surfaced.

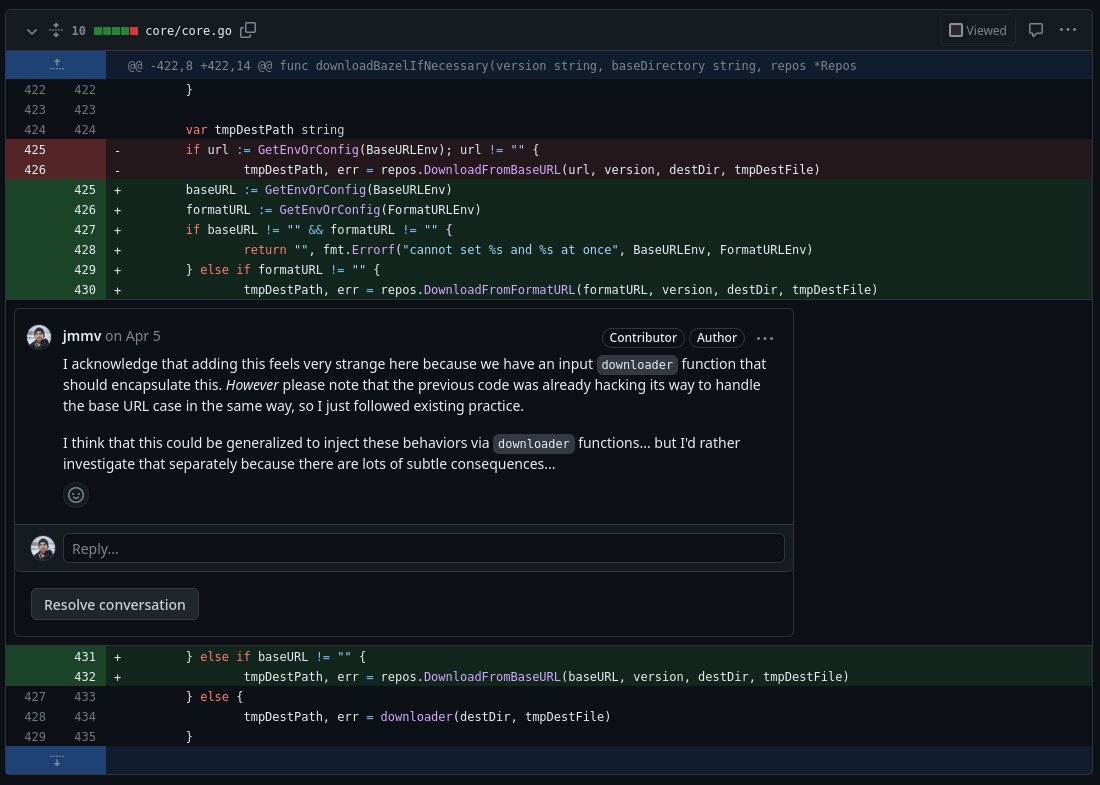

My review comments were copious, so I classified them in three categories: nits, which were inconsequential style changes; optional suggestions, which X could ignore; and important comments, which required X to change code or for us to have a detailed discussion.

Importantly, I always justified my review comments, especially if they were personal opinions or optional suggestions. Most of the time, X took my advice and addressed nits and optional suggestions, because he was convinced by my explanations.

Other times, X pushed back. Maybe I hadn’t understood the change or maybe I was just simply wrong. These cases needed further discussion and often resulted in side 1:1 conversations or even the request to write a mini design doc to flush out unclear ideas.

The time to ship came. For context, Bazel used to be released once every two weeks within Google. New features were gated behind feature flags, which minimized risk in the release process. And if something went wrong, the two-week release cadence allowed addressing bugs quickly.

Feature A was ready before feature B… or was it? The overhead of the reviews in A had been minimal so the code shipped quickly. However… feature A did not work. X needed multiple release cycles to address bugs, all under pressure because the feature “had already launched”.

Feature B, on the other hand… took longer to ship. But once it did, it worked on the first try. Sure, it wasn’t perfect and needed some iteration to address small issues, but the bulk of the code just worked. This made X proud and understood why I had been thorough.

To this day, X still remembers shipping these two features. He has told the story above multiple times and praised the process we followed for feature B. For me, it was mostly “business as usual” and didn’t really notice the (positive) impact it had on him.

X also claims to have learned a lot just by reading my comments, thinking about them, and having to do the tricky work to write small, well-tested changes. And you know what? He has recently earned the title of CTO at an exciting startup.

Anyhow. I realize this whole story shows flaws in various places. Z should probably have paid more attention to X and Y. Maybe X should have written more-detailed design docs upfront. Maybe we should all have pair-programmed. No team is perfect.

But code reviews do work. Yes, they can be problematic, but I don’t think that code reviews are intrinsically antagonistic: it’s the people involved. Code reviews are just a tool to achieve the goals of quality and mentoring, and as a tool, it has to be used wisely.

And one last thing: code reviews are truly async-friendly. This may be why they are popular in open source, and in this particular case, they truly helped X and I due to the physical distance between us. Q.E.D.