Imagine this scenario: your team uses Bazel for fast, distributed C++ builds. A developer builds a change on their workstation, all tests pass, and the change is merged. The CI system picks it up, gets a cache hit from the developer’s build, and produces a release artifact. Everything looks green. But when you deploy to production, the service crashes with a mysterious error: version 'GLIBC_2.28' not found. What went wrong?

The answer lies in the subtle but dangerous interaction between Bazel’s caching, remote execution, and differing glibc versions across your fleet. In previous posts in this series, I’ve covered the fundamentals of action non-determinism, remote caching, and execution execution. Now, finally, we’ll build on those to tackle this specific problem.

This article dives deep into how glibc versions can break build reproducibility and presents several ways to fix it—from an interesting hack (which spawned this whole series) to the ultimate, most robust solution.

The scenario

Suppose you have a pretty standard (corporate?) development environment like the following:

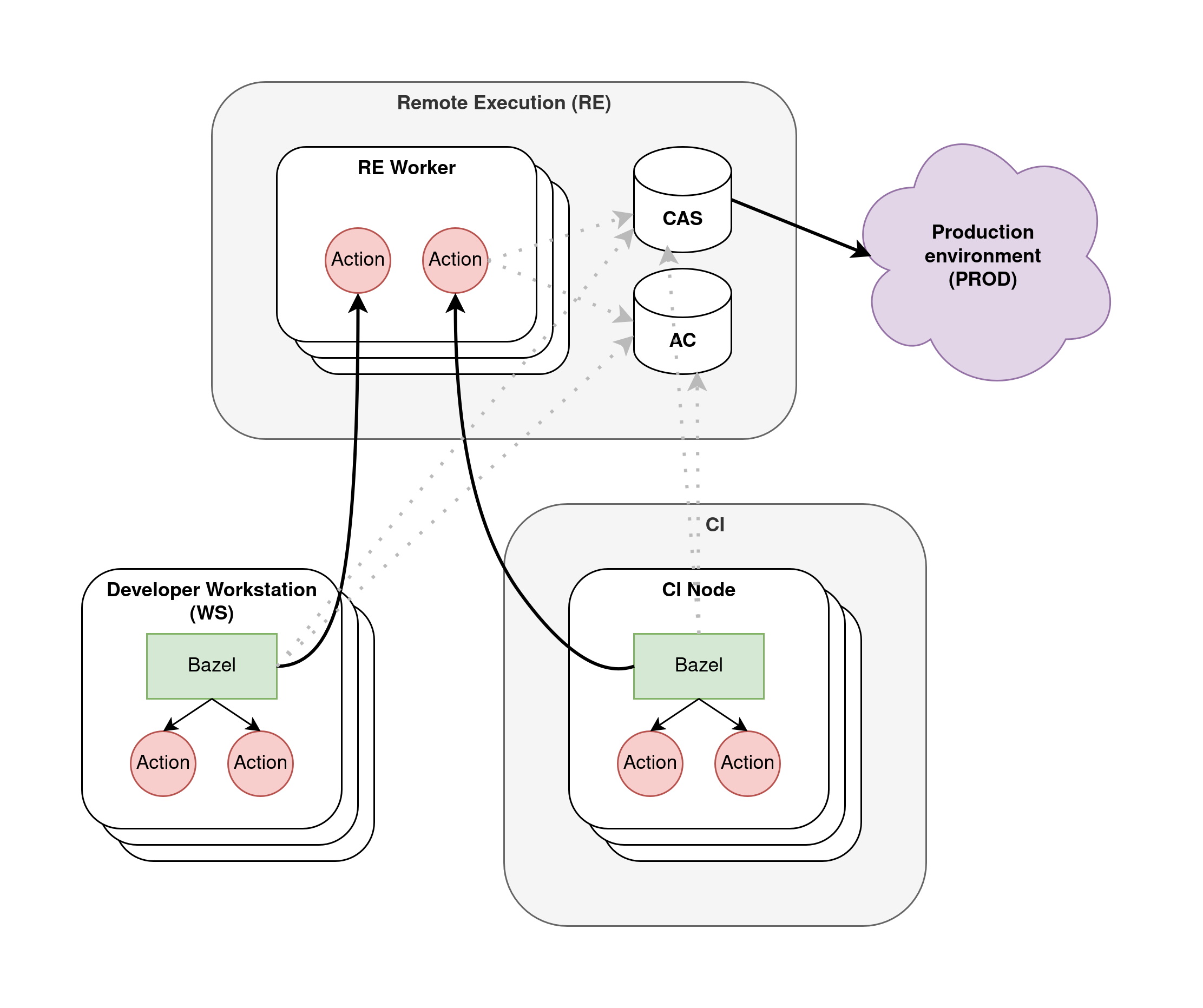

Developer workstations (WS). This is where Bazel runs during daily development, and Bazel can execute build actions both locally and remotely.

A CI system. This is a distributed cluster of machines that run jobs, including PR merge validation and production release builds. These jobs execute Bazel too, who in turn executes build actions both locally and remotely.

The remote execution (RE) system. This is a distributed cluster of worker machines that execute individual Bazel build actions remotely. The key components we want to focus on today are the AC, the CAS, and the workers—all of which I covered in detail in the previous two articles.

The production environment (PROD). This is where you deploy binary artifacts to serve your users. No build actions run here.

All of the systems above run some version of Linux, and it is tempting to wish to keep such version in sync across them all. The reasons would include keeping operations simpler and ensuring that build actions can run consistently no matter where they are executed.

However, this wish is misguided and plain impossible. It is misguided because you may not want to run the same Linux distribution on all three environments: after all, the desktop distribution you run on WS may not be the best choice for RE workers, CI nodes, nor production. And it is plain impossible because, even if you aligned versions to the dot, you would need to take upgrades at some point: distributed upgrades must be rolled out over a period of time (weeks or even months) for reliability, so you’d have to deal with version skew anyway.

To make matters more complicated, the remote AC is writable from all of WS, CI, and RE to maximize Bazel cache hits and optimize build times. This goes against best security practices (so there are mitigations in place to protect PROD), but it’s a necessity to support an ongoing onboarding into Bazel and RE.

The problem

The question becomes: can the Linux version skew among all machines involved cause problems with remote caching?

It sure can because C and C++ build actions tend to pick up system-level dependencies in a way that Bazel is unaware of (by default), and those influence the output the actions produce. Here, look at this:

$ nm prod-binary | grep GLIBC_2.28

U mtx_init@@GLIBC_2.28

$ █

The version of glibc leaks into binaries and this is invisible to Bazel’s C/C++ action keys. glibc versions its symbols to provide runtime backwards compatibility when their internal details change, and this means that binaries built against newer glibc versions may not run on systems with older glibc versions.

How is this a problem though? Let’s take a look by making the problem specific. Consider the following environment:

| Environment | Purpose | glibc version |

|---|---|---|

| WS | Developer workstations | 2.28 |

| CI-1 | CI production environment | 2.17 |

| CI-2 | CI staging environment | 2.28 |

| RE | Shared RE cluster | 2.17 |

| PROD | Production deployments | 2.17 |

In this environment, developers run Bazel in WS for their day-to-day work, and CI-1 runs Bazel to support development flows (PR merge-time checks) and to produce binaries for PROD. CI-2 sometimes runs builds too. All of these systems can write to the AC that lives in RE.

As it so happens, one of the C++ actions involved in the build of prod-binary, say //base:my_lib, has a local tag which forces the action to bypass remote execution. This can lead to the following sequence of events:

A developer runs a build on a WS.

//base:my_libhas changed so it is rebuilt on the WS. The action uses the C++ compiler, so the object files it produces pick up the dependency on glibc 2.28. The result of the action is injected into the remote cache.CI-1 schedules a job to build

prod-binaryfor release. This job runs Bazel on a machine with glibc 2.17 and leverages the RE cluster which also contain glibc 2.17. Many C++ actions get rebuilt but//base:my_libis reused from the cache. The production artifact now has a dependency on symbols from glibc 2.28.Release engineering picks the output of CI-1, deploys the production binary to PROD, and… boom, PROD explodes:

./prod-binary: /lib64/libc.so.6: version `GLIBC_2.28' not found (required by ./prod-binary)

The fact that the developer WS could write to the AC is very problematic on its own, but we could encounter this same scenario if we first ran the production build on CI-2 for testing purposes and then reran it on CI-1 to generate the final artifact.

So, what do we do now? In a default Bazel configuration, C and C++ action keys are underspecified and can lead us to non-deterministic behavior when we have a mixture of host systems compiling them.

Solution A: manually partition the AC

Let’s start with the case where you aren’t yet ready to strictly restrict writes to the AC from RE workers, yet you want to prevent obvious mistakes that lead to production breaks.

The idea here is to capture the glibc version that is used in the local and remote environments, pick the higher of the two, and make that version number an input to the C/C++ toolchain. This causes the version to become part of the cache keys and should prevent the majority of the mistakes we may see.

WARNING: This is The Hack I recently implemented and that drove me to writing this article series! Prefer the options presented later, but know that you have this one up your sleeve if you must mitigate problems quickly.

To implement this hack, the first thing we have to do is capture the local glibc version. We can do this with:

genrule(

name = "glibc-local-version",

outs = ["glibc-local-version.txt"],

cmd = "grep GNU_LIBC_VERSION bazel-out/stable-status.txt \

| cut -d ' ' -f 2- >$@",

stamp = True,

tags = ["sandboxed"],

)

One important tidbit here is the use of the stable-status.txt file, indirectly via the requirement of stamping. This is necessary to force this action to rerun on every build because we don’t want to hit the case of using an old bazel-out tree against an upgraded system. As a consequence, we need to modify the script pointed at by --workspace_status_command script (you have one, right?) to emit the glibc version:

echo "STABLE_GNU_LIBC_VERSION $(getconf GNU_LIBC_VERSION)"

The second thing we have to do is capture the remote glibc version. This is… trickier because there is no tag to force Bazel to run an action remotely. Even if we assume remote execution, features like the dynamic spawn strategy or the remote local fallback could cause the action to run locally at random. To prevent problems, we have to detect whether the action is running within RE workers or not, and the way to do that will depend on your environment:

genrule(

name = "glibc-remote-version",

outs = ["glibc-remote-version.txt"],

cmd = """

if [ ! -e /etc/remote-worker.cookie ]; then

# Trick dynamic scheduling (when it is enabled) into

# preferring to run the action remotely.

#

# You have two choices here: fail hard, which makes

# this correct, or accept that this is a heuristic by

# making the failure unlikely with a sleep. You might

# want the sleep if you don't want build breakages

# when there is significant action queuing, for

# example.

exit 1

#sleep 60

fi

getconf GNU_LIBC_VERSION >$@

""",

)

The third part of the puzzle is to select the highest glibc version between the two that we collected. We can do this with the following genrule, leveraging sort’s -V flag to compare versions. This flag is a GNU extension… but we are talking about glibc anyway here so I’m not going to be bothered by it:

genrule(

name = "glibc-max-version",

srcs = [

":glibc-local-version",

":glibc-remote-version",

],

outs = ["glibc-max-version.txt"],

cmd = """

local="$$(cat $(location :glibc-local-version)

| cut -d ' ' -f 2)"

remote="$$(cat $(location :glibc-remote-version)

| cut -d ' ' -f 2)"

echo "Local glibc version: $${local}" 1>&2

echo "Remote glibc version: $${remote}" 1>&2

chosen="$$(printf "$$local\n$$remote\n"

| sort -rV | head -1)"

echo "$${chosen}" >$@

""",

)

And, finally, we can go to our C++ toolchain definition and modify it to depend on the glibc-max-version produced by the previous action:

filegroup(

name = "toolchain_files",

srcs = [

# ...

":glibc-max-version",

# ...

],

)

cc_toolchain(

name = "glibc_safe_toolchain",

all_files = toolchain_files,

# ...

)

Ta-da! All of our C/C++ actions now encode the highest possible glibc version that the outputs they produce may depend on. And, while not perfect, this is an easy workaround to guard against most mistakes.

But can we do better? Of course.

Solution B: restrict AC writes to RE only

Based on the previous articles, what we should think about is plugging the AC hole and forcing build actions to always run on the RE workers. In this way, we would precisely control the environment that generates action outputs and we should be good to go.

Unfortunately, we can still encounter problems! Remember how I said that, at some point, you will have to upgrade glibc versions? What happens when you are in the middle of a rolling upgrade to your RE workers? The worker pool will end up with different “partitions”, each with a different glibc version, and you will still run into this issue.

To handle this case, you would need to have different worker pools, one with the old glibc version and one with the new version, and then make the worker pool name be part of the action keys. You would then have to migrate from one pool to the other in a controlled manner. This would work well at the expense of reducing cache effectiveness, causing a a big toll on operations, and making the rollout risky because the switch from one pool to another is a all-or-nothing proposition.

Solution C: sysroots

The real solution comes in the form of sysroots. The idea is to install multiple parallel versions of glibc in all environments and then modify the Bazel C/C++ toolchain to explicitly use a specific one. In this way, the glibc version becomes part of the cache key and all build outputs are pinned to a deterministic glibc version. This allows us to roll out a new version slowly with a code change, pinning the version switch to a specific code commit that can be rolled back if necessary, and keeping the property of reproducible builds for older commits.

This is the solution outlined at the end of Picking glibc versions at runtime and is the only solution that can provide you 100% safety against the problem presented in this article. It is difficult to implement, though, because convincing GCC and clang to not use system-provided libraries is tricky and because this solution will sound alien to most of your peers.

What will you do?

The problem presented in this article is far from theoretical, but it’s often forgotten about because typical build environments don’t present significant skew across Linux versions. This means that facing new glibc symbols is unlikely, so the chances of ending up with binary-incompatible artifacts are low. But they can still happen, and they can happen at the worst possible moment.

Therefore, you need to take action. I’d strongly recommend that you go towards the sysroot solution because it’s the only one that’ll give you a stable path for years to come, but I also understand that it’s hard to implement. Therefore, take the solutions in the order I gave them to you: start with the hack to mitigate obvious problems, follow that up with securing the AC, and finally go down the sysroot rabbit hole.

As for the glibc 2.17 mentioned en-passing above, well, it is ancient by today standards at 13 years of age, but it is what triggered this article in the first place. glibc 2.17 was kept alive for many years by the CentOS 7 distribution—an LTS system used as a core building block by companies and that reached EOL a year ago, causing headaches throughout the industry. Personally, I believe that relying on LTS distributions is a mistake that ends up costing more money/time than tracking a rolling release, but I’ll leave that controversial topic for a future opinion post.